
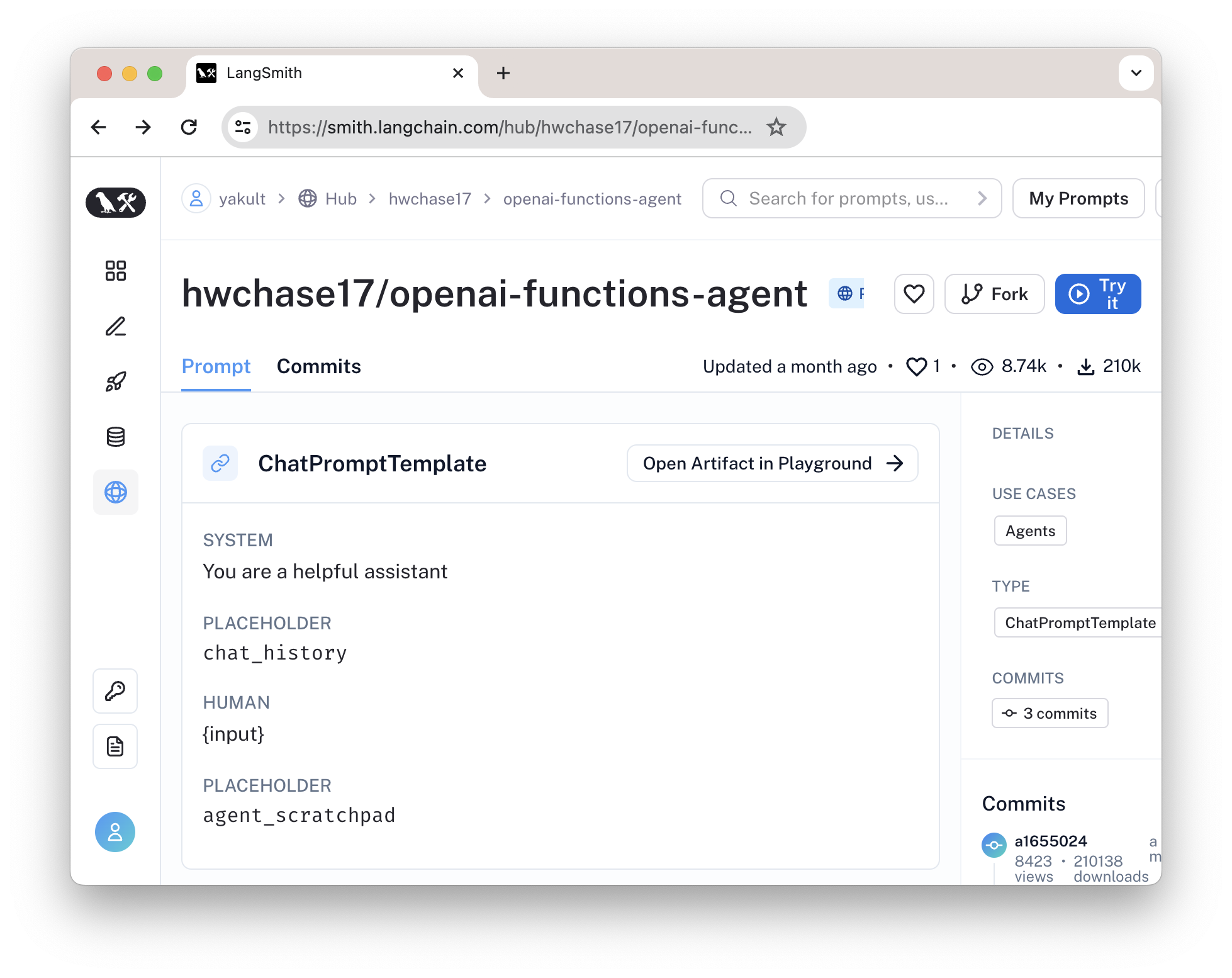

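In LangChain, an Agent means that, given a set of tools, the AI model decides which tools to use as needed. The LangChain documentation describes it this way (docs):

> The core idea of LangChain Agents is to use a large language model to choose a sequence of actions to take. In a Chain, the sequence of actions is hardcoded in code. In an Agent, the language model is used as a reasoning engine to determine which actions to take and in which order.

LangChain Agents involve several key concepts (see the documentation: https://python.langchain.com/docs/modules/agents/concepts).

Next, let's walk through an example to get a quick feel for using LangChain Agents. The example is adapted from the LangChain Agent QuickStart and uses two tools: Tavily search and RAG.

To use Tavily search, you need to register on the Tavily website to obtain an API key. In the notebook, we configure the environment variable as follows:

```python
#@title Configure the Tavily Search API key from a Colab secret
# Name of the Colab secret that stores your Tavily API key
tavily_api_secret_name = 'tavily'  # @param {type: "string"}
# 'tavily' is the notebook author's secret name; enter your own in the form field above

import os
from google.colab import userdata

os.environ["TAVILY_API_KEY"] = userdata.get(tavily_api_secret_name)
```

Create the search tool:

```python
from langchain_community.tools.tavily_search import TavilySearchResults

# Picks up the API key from the TAVILY_API_KEY environment variable set above
search = TavilySearchResults()
```

We can call it to see how it works:

```python
search.invoke("what is the weather in SF")
```

Partial result:

```
{'url': 'https://www.sfexaminer.com/news/climate/san-francisco-weather-forecast-calls-for-strongest-2024-rain/article_75347810-bfc3-11ee-abf6-e74c528e0583.html', 'content': "San Francisco is projected to receive 2.5 and 3 inches of rain, Clouser said, as well as gusts of wind up to 45 mph. One of winter's 'stronger' storms to douse San Francisco Bay Area starting Wednesday at 4 a.m. and a 24-hour wind advisory in San Francisco starting at the same time. to Sept. 30) in The City’s history, highlighted by a 10-day stretch last January in which San Francisco received 10.3Updated Feb 2, 2024 3.4 magnitude earthquake strikes near San Francisco Zoo Updated Feb 2, 2024 SF hopes to install 200 heat pumps in 200 days in at-risk homes Updated Feb 2, 2024 On the..."},...
```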
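The key setup at the start of this example relies on `google.colab.userdata`, which only exists inside Colab. Outside Colab, for example in a local Jupyter notebook, you can use a small alternative. This sketch assumes you are willing to type the key interactively. It reuses `TAVILY_API_KEY` if that variable is already set, and otherwise asks for the key with a hidden prompt. The helper name `ensure_env_key` is our own and is not part of LangChain or Tavily.

```python
import os
from getpass import getpass


def ensure_env_key(var_name: str = "TAVILY_API_KEY") -> str:
    """Return the value of `var_name`, prompting for it only if it is missing."""
    value = os.environ.get(var_name)
    if not value:
        # getpass hides the typed characters, so the key is not echoed in the notebook
        value = getpass(f"Enter {var_name}: ")
        os.environ[var_name] = value
    return value


# Usage (outside Colab): call once before creating TavilySearchResults()
# ensure_env_key("TAVILY_API_KEY")
```

Writing the key into `os.environ` keeps the rest of the example unchanged, because `TavilySearchResults()` reads the same environment variable.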
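Here, we use a web page loader to load a page, embed its text, and store the embeddings in a FAISS vector store. First, install the dependencies:

```python
!pip install -U faiss-cpu tiktoken --quiet
```

```python
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_community.document_loaders import WebBaseLoader
from langchain_community.vectorstores import FAISS
from langchain_openai import OpenAIEmbeddings

# Load the LangSmith overview page as LangChain Document objects
loader = WebBaseLoader("https://docs.smith.langchain.com/overview")
docs = loader.load()

# Split into chunks of up to about 1000 characters, with up to 200 characters shared by neighboring chunks
documents = RecursiveCharacterTextSplitter(
    chunk_size=1000, chunk_overlap=200
).split_documents(docs)

# Embed the chunks (OpenAIEmbeddings requires OPENAI_API_KEY to be set) and index them in FAISS
vector = FAISS.from_documents(documents, OpenAIEmbeddings())
retriever = vector.as_retriever()
```

Let's try calling it:

```python
# Newer LangChain releases deprecate get_relevant_documents in favor of retriever.invoke(...)
retriever.get_relevant_documents("how to upload a dataset")
```

Result:

```
Document(page_content="dataset uploading.Once we have a dataset, how can we use it to test changes to a prompt or chain? The most basic approach is to run the chain over the data points and visualize the outputs. Despite technological advancements, there still is no substitute for looking at outputs by eye. Currently, running the chain over the data points needs to be done client-side. The LangSmith client makes it easy to pull down a dataset and then run a chain over them, logging the results to a new project associated with the dataset. From there, you can review them. We've made it easy to assign feedback to runs and mark them as correct or incorrect directly in the web app, displaying aggregate statistics for each test project.We also make it easier to evaluate these runs. To that end, we've added a set of evaluators to the open-source LangChain library. These evaluators can be specified when initiating a test run and will evaluate the results once the test run completes. If we’re being honest, most", metadata={'source': 'https://docs.smith.langchain.com/overview', 'title': 'LangSmith Overview and User Guide', 'description': '...', 'language': 'en'}),...
```

Next, wrap the retriever as a tool:

```python
from langchain.tools.retriever import create_retriever_tool

retriever_tool = create_retriever_tool(
    retriever=retriever,
    name="langsmith_search",
    description="Search for information about LangSmith. For any questions about LangSmith, you must use this tool!",
)
```

Here, `create_retriever_tool` takes three arguments: `retriever`, `name`, and `description`. See the documentation: langchain reference.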
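Conceptually, the FAISS retriever ranks chunks by how similar they are to the query. `create_retriever_tool` then wraps that retriever so the model can call it by name, and the model decides when to call it based on the description.

The framework-free sketch below mimics both steps, with heavy simplifications:

- Word counts compared with cosine similarity stand in for OpenAI embeddings and FAISS.
- `SimpleTool`, `make_retriever`, and `make_retriever_tool` are hypothetical names, not LangChain APIs.
- Joining the retrieved chunks with a blank line is a choice made for this sketch only.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass
from typing import Callable, List


def bag_of_words(text: str) -> Counter:
    """Lowercase word counts: a crude stand-in for a real embedding vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def make_retriever(chunks: List[str], k: int = 2) -> Callable[[str], List[str]]:
    """Index the chunks once, then return the k chunks most similar to a query."""
    index = [(chunk, bag_of_words(chunk)) for chunk in chunks]

    def retrieve(query: str) -> List[str]:
        q = bag_of_words(query)
        ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]

    return retrieve


@dataclass
class SimpleTool:
    """Hypothetical tool record: the model only sees the name and description."""
    name: str
    description: str
    func: Callable[[str], str]


def make_retriever_tool(retrieve: Callable[[str], List[str]], name: str, description: str) -> SimpleTool:
    # A tool result goes back to the model as text, so join the retrieved chunks into one string
    return SimpleTool(name, description, lambda query: "\n\n".join(retrieve(query)))


chunks = [
    "You can upload a dataset to LangSmith and run a chain over it.",
    "Tracing logs every run so you can debug latency and token usage.",
    "San Francisco weather is rainy this week.",
]
tool = make_retriever_tool(make_retriever(chunks, k=1), "langsmith_search",
                           "Search for information about LangSmith.")
print(tool.func("how to upload a dataset"))
# You can upload a dataset to LangSmith and run a chain over it.
```

Real embeddings capture meaning rather than exact word overlap, which is why the tutorial uses `OpenAIEmbeddings`. The control flow of the sketch is the same: index once, then rank chunks per query.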
We define a toolset, the `tools` list, for the Agent to use:

```python
tools = [search, retriever_tool]
```

We use a prompt template from LangSmith Hub, `hwchase17/openai-functions-agent`. Its actual template is:

```python
[
    SystemMessagePromptTemplate(
        prompt=PromptTemplate(input_variables=[], template='You are a helpful assistant')),
    MessagesPlaceholder(variable_name='chat_history', optional=True),
    HumanMessagePromptTemplate(
        prompt=PromptTemplate(input_variables=['input'], template='{input}')),
    MessagesPlaceholder(variable_name='agent_scratchpad')
]
```

Load the template from LangSmith Hub:

```python
from langchain import hub

prompt = hub.pull("hwchase17/openai-functions-agent")
```

Next, create the model and the agent:

```python
from langchain_openai import ChatOpenAI

# temperature=0 reduces randomness in the model's choices
llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)
```

```python
from langchain.agents import create_openai_tools_agent

agent = create_openai_tools_agent(llm, tools, prompt)
```

Here we use `create_openai_tools_agent`. See the documentation: Agent Types - OpenAI tools. Note the following (quoted from the LangChain documentation):

> The OpenAI API has deprecated functions in favor of tools. The difference between the two is that the tools API allows the model to request multiple function calls in a single request, which can reduce response times.

Here, we set `verbose=True` so that we can watch how the Agent calls its tools:

```python
from langchain.agents import AgentExecutor

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)
```

Invocation and result:

```python
agent_executor.invoke({"input": "hi!"})
```

```
> Entering new AgentExecutor chain...
Hello! How can I assist you today?

> Finished chain.
{'input': 'hi!', 'output': 'Hello! How can I assist you today?'}
```
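In the `hi!` run, the model answered directly and called no tool. The plain-Python sketch below shows how the four slots of the `hwchase17/openai-functions-agent` template are filled on each step:

- the system message;
- the optional `chat_history`;
- the human `input`;
- `agent_scratchpad`.

It uses `(role, content)` tuples instead of LangChain message classes. `build_messages` is a hypothetical helper, and the real agent formats tool calls and results into the scratchpad with its own message types.

```python
from typing import List, Optional, Tuple

Message = Tuple[str, str]  # (role, content): simplified stand-in for LangChain message objects


def build_messages(user_input: str,
                   chat_history: Optional[List[Message]] = None,
                   agent_scratchpad: Optional[List[Message]] = None) -> List[Message]:
    """Fill the four slots of the template, in the template's order."""
    messages: List[Message] = [("system", "You are a helpful assistant")]
    # chat_history is declared optional=True in the template, so it may be empty
    messages.extend(chat_history or [])
    messages.append(("human", user_input))
    # agent_scratchpad carries this run's earlier tool calls and results; empty on the first step
    messages.extend(agent_scratchpad or [])
    return messages


first_step = build_messages("hi!")
print(first_step)
# [('system', 'You are a helpful assistant'), ('human', 'hi!')]
```

Because the scratchpad sits after the human message, each tool result the agent gathers is appended after the question. On the next step the model sees both the question and the evidence collected so far.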
Invocation and result:

```python
agent_executor.invoke({"input": "whats the weather in sf?"})
```

```
> Entering new AgentExecutor chain...

Invoking: `tavily_search_results_json` with `{'query': 'weather in San Francisco'}`

……
> Finished chain.
{'input': 'whats the weather in sf?',
 'output': 'The weather in San Francisco is currently rainy with gusts of wind up to 45 mph. There is a strong storm expected to douse the Bay Area with 2.5 to 3 inches of rain. There is also a wind advisory in effect. Flights at San Francisco International Airport have been delayed and canceled due to the gusty winds. You can find more information about the weather in San Francisco in the following articles:\n\n1. [San Francisco Examiner - Climate](https://www.sfexaminer.com/news/climate/san-francisco-weather-forecast-calls-for-strongest-2024-rain/article_75347810-bfc3-11ee-abf6-e74c528e0583.html)\n2. [SF Standard - Gusty Winds Cause Delays at SFO, Power Outages as Powerful Storm Pounds the Bay](https://sfstandard.com/2024/02/04/gusty-winds-cause-delays-at-san-francisco-airport-power-outages-as-powerful-storm-pounds-the-bay/)\n3. [CNN - Atmospheric river brings heavy rain, flooding to California](https://www.cnn.com/2024/02/04/us/california-atmospheric-river-flooding/index.html)'}
```
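The weather run shows the loop that `AgentExecutor` drives:

1. The model asks for `tavily_search_results_json`.
2. The executor runs that tool.
3. The result goes back to the model, which then writes the answer.

Below is a framework-free sketch of that loop. A scripted fake model and a fake search tool stand in for the LLM and Tavily, and all names here are hypothetical. The loop runs every tool call in a reply, mirroring the tools API's ability to request several calls in one response. The `max_steps` cap plays a role similar to `AgentExecutor`'s `max_iterations` setting.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class ToolCall:
    name: str
    query: str


@dataclass
class ModelReply:
    # Either a list of tool calls (possibly several at once) or a final answer
    tool_calls: List[ToolCall] = field(default_factory=list)
    answer: Optional[str] = None


def run_agent(model: Callable[[str, List[str]], ModelReply],
              tools: Dict[str, Callable[[str], str]],
              user_input: str,
              max_steps: int = 5) -> str:
    """Minimal agent loop: ask the model, run the requested tools, feed results back, repeat."""
    scratchpad: List[str] = []  # observations from earlier tool calls in this run
    for _ in range(max_steps):
        reply = model(user_input, scratchpad)
        if reply.answer is not None:
            return reply.answer
        # Run every tool call the model requested in this reply
        for call in reply.tool_calls:
            result = tools[call.name](call.query)
            scratchpad.append(f"{call.name}({call.query!r}) -> {result}")
    return "Stopped: reached max_steps without a final answer."


def scripted_model(user_input: str, scratchpad: List[str]) -> ModelReply:
    """Hypothetical stand-in for the LLM: search once, then answer from the result."""
    if not scratchpad:
        return ModelReply(tool_calls=[ToolCall("fake_search", "weather in San Francisco")])
    return ModelReply(answer="Based on search: " + scratchpad[-1].split(" -> ")[1])


fake_tools = {"fake_search": lambda q: "rainy and windy"}
print(run_agent(scripted_model, fake_tools, "whats the weather in sf?"))
# Based on search: rainy and windy
```

In the real run, the decision "search first, then answer" comes from the LLM reading the tool descriptions. Here that decision is hard-coded into `scripted_model`, which is exactly the Chain-versus-Agent distinction quoted in the introduction.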
Note: logically, the agent should call the RAG retrieval tool for the next question, but in practice it did not. Repeated attempts with the GPT-3.5-turbo and GPT-4-Turbo-preview models failed, and only GPT-4 successfully called the RAG retrieval tool. The process was as follows:

```python
agent_executor.invoke({"input": "how to use LangSmith"})
```

```
Invoking: `langsmith_search` with `{'query': 'how to use LangSmith'}`

LangSmith Overview and User Guide | LangSmith
……
> Finished chain.
{'input': 'how to use LangSmith',
 'output': "LangSmith is a tool designed to simplify the setup of reliable LLM applications. Here's a brief guide on how to use it:\n\n1. **Tracing**: At LangChain, LangSmith's tracing is always running in the background. This is achieved by setting environment variables, which are established whenever a virtual environment is launched or a bash shell is opened.\n\n2. **Editing and Adding Examples**: You can quickly edit examples and add them to datasets to expand the evaluation sets or to fine-tune a model for improved quality or reduced costs.\n\n3. **Monitoring**: LangSmith can be used to monitor your application in the same way you used it for debugging. You can log all traces, visualize latency and token usage statistics, and troubleshoot specific issues as they arise. Each run can also be assigned string tags or key-value metadata, allowing you to attach correlation ids or AB test variants, and filter runs accordingly.\n\n4. **Feedback**: It's possible to associate feedback programmatically with runs. If your application has a thumbs up/down button on it, you can use that to log feedback back to LangSmith. This can be used to track performance over time and pinpoint underperforming data points, which you can subsequently add to a dataset for future testing.\n\n5. **Exporting Datasets**: LangSmith makes it easy to curate datasets. These datasets can be exported for use in other contexts, such as for use in OpenAI Evals or fine-tuning, such as with FireworksAI.\n\nFor more detailed instructions and examples, you can refer to the LangSmith documentation."}
```
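The author found that only GPT-4 reliably picked `langsmith_search` for this question, so it helps to check which tools a run actually used. `AgentExecutor` accepts `return_intermediate_steps=True`, which adds an `intermediate_steps` list of `(action, observation)` pairs to the returned dict.

The sketch below runs offline:

- `FakeAgentAction` is a hypothetical stand-in for LangChain's `AgentAction`, which has `tool`, `tool_input`, and `log` fields.
- `tools_called` is our own helper.
- The observation string is a placeholder, not real output.

```python
from dataclasses import dataclass
from typing import Any, List, Tuple


@dataclass
class FakeAgentAction:
    """Stand-in mirroring the tool / tool_input / log fields of LangChain's AgentAction."""
    tool: str
    tool_input: Any
    log: str = ""


def tools_called(intermediate_steps: List[Tuple[Any, Any]]) -> List[str]:
    """Names of the tools the agent invoked, in call order."""
    return [action.tool for action, _observation in intermediate_steps]


# Shaped like result["intermediate_steps"] for a run that did use the retriever tool
steps = [(FakeAgentAction("langsmith_search", {"query": "how to use LangSmith"}), "(retrieved text)")]
print(tools_called(steps))                        # ['langsmith_search']
print("langsmith_search" in tools_called(steps))  # True

# With the real executor (needs API keys, so not run here):
# agent_executor = AgentExecutor(agent=agent, tools=tools, return_intermediate_steps=True)
# result = agent_executor.invoke({"input": "how to use LangSmith"})
# print(tools_called(result["intermediate_steps"]))
```

An empty list means the model answered from its own knowledge without calling any tool. With some models, that is the failure mode the note describes.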
In this tutorial, we worked through an example of the Agent application pattern, in which the AI model chooses its tools on its own. See the Agent section of the LangChain documentation for more information; for complex Agent logic, you can also use LangGraph.

Last updated: 2024/11/26

Alang.AI - Make Great AI Applications Copyright © 2025