Compare

Overview

Cursor AI vs Perplexity for math: a comparison across different aspects of AI services, based on data mined from genuine user reviews and ratings, including: ALL, Interesting, Helpfulness, Correctness. AI store is a platform of genuine user reviews, ratings, and AI-generated content, covering a wide range of categories including AI Image Generators, AI Chatbot & Assistant, AI Productivity Tool, AI Video Generator, AI in Healthcare, AI in Education, AI in Lifestyle, AI in Finance, AI in Business, AI in Law, AI in Travel, AI in News, AI in Entertainment, AI for Kids, AI for Elderly, AI Search Engine, AI Quadruped Robot.

Reviews Comparison


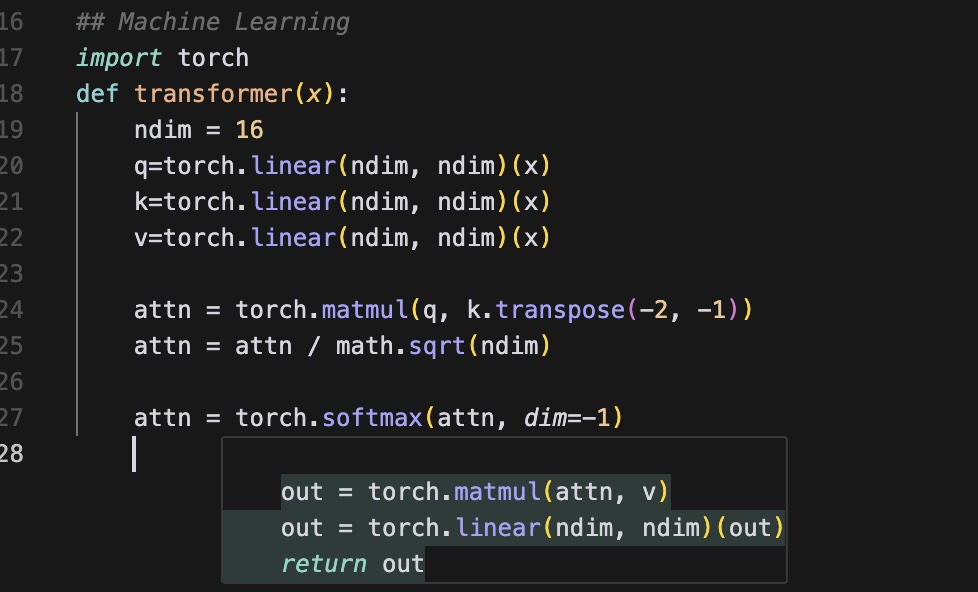

codemonkey 2024-10-31 17:12

Another, more complicated task I tested in the Cursor code editor was writing Python code to implement the Transformer from the paper "Attention Is All You Need". I wrote the function signature "def transformer(x):" and a few opening lines such as "q = torch.nn.Linear(", and with Cursor's suggested code I finished the transformer function in less than a minute. So it can really help me be more productive when writing code. The only drawback is that when I wanted to modify the code and used backspace to delete some lines, Cursor seemed not to know what to do and gave no hints for a while, until I started writing something else. The newly suggested code was also usually quite different from the earlier suggestions.

```
## Machine Learning
### Input
import math

import torch


def transformer(x):
    # x: tensor whose last dimension is ndim, e.g. shape (seq_len, ndim)
    ndim = 16
    # Query/key/value projections. Note: these layers are created (with random
    # weights) on every call, so nothing is learned between calls.
    q = torch.nn.Linear(ndim, ndim)(x)
    k = torch.nn.Linear(ndim, ndim)(x)
    v = torch.nn.Linear(ndim, ndim)(x)
    # Scaled dot-product attention weights
    attn = torch.matmul(q, k.transpose(-2, -1))
    attn = attn / math.sqrt(ndim)
    attn = torch.softmax(attn, dim=-1)
    ### Cursor Suggested Code:
    out = torch.matmul(attn, v)
    out = torch.nn.Linear(ndim, ndim)(out)  # output projection
    return out
```
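The reviewer's function relies on PyTorch, but its core is scaled dot-product attention, softmax(QKᵀ/√d)·V. For readers who want to see that arithmetic without a framework, here is a minimal, dependency-free sketch of a single attention head. It assumes Q, K, and V are already projected and given as lists of rows; it omits masking, batching, multiple heads, and learned weights, and the helper names are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def transpose(m):
    """Transpose a matrix given as a list of rows."""
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    """Multiply matrices given as lists of rows: (n x d) @ (d x m)."""
    return [
        [sum(a_ik * b[k][j] for k, a_ik in enumerate(row)) for j in range(len(b[0]))]
        for row in a
    ]


def scaled_dot_product_attention(q, k, v):
    """Single-head attention: softmax(Q K^T / sqrt(d_k)) V.

    Returns (output, weights), where each row of weights sums to 1.
    """
    d_k = len(q[0])
    scores = matmul(q, transpose(k))                        # (n_q x n_k)
    scores = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scores]              # row-wise softmax
    return matmul(weights, v), weights                      # (n_q x d_v)
```

For example, a query of all zeros scores every key equally, so it attends uniformly and returns the mean of the value rows. Dividing by √d_k keeps the dot products from growing with the dimension and saturating the softmax.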

-

Community

-

We are witnessing great success in recent developments of generative artificial intelligence across many fields, such as AI assistants, chatbots, and AI writers. Among all AI-native products, AI search engines such as Perplexity, Gemini, and SearchGPT are the most attractive to website owners, bloggers, and web content publishers. An AI search engine is a new kind of tool that provides answers directly to users' questions (queries). In this blog, we give a brief introduction to the basic concepts behind AI search engines, including Large Language Models (LLM), Retrieval-Augmented Generation (RAG), citations, and sources. Then we highlight some major differences between traditional Search Engine Optimization (SEO) and Generative Engine Optimization (GEO). Finally, we cover some of the latest research and strategies to help website owners and content publishers better optimize their content for generative AI search engines.
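To make the idea of Retrieval-Augmented Generation with citations a little more concrete, here is a toy sketch of the retrieve-then-cite step. It assumes simple keyword-overlap scoring in place of the embedding-based search real AI search engines use; the document list, URLs, and function names are hypothetical, and the final prompt would be passed to an LLM, which is not shown.

```python
import re


def tokenize(text):
    """Lowercase word set used for toy keyword matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, docs, top_k=2):
    """Rank documents by how many query terms they share (toy lexical scoring)."""
    q = tokenize(query)
    scored = [(len(q & tokenize(d["text"])), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no terms with the query.
    return [d for score, d in scored[:top_k] if score > 0]


def build_prompt(query, sources):
    """Number each retrieved source so the model can cite it as [1], [2], ..."""
    lines = [f"[{i}] {s['url']}: {s['text']}" for i, s in enumerate(sources, start=1)]
    return (
        "Answer the question using only the sources below and cite them by number.\n"
        + "\n".join(lines)
        + f"\n\nQuestion: {query}"
    )


# Usage with a hypothetical corpus:
docs = [
    {"url": "https://example.com/rag", "text": "Retrieval-augmented generation grounds LLM answers in retrieved documents"},
    {"url": "https://example.com/seo", "text": "Traditional SEO targets ranked lists of links"},
]
prompt = build_prompt("how does retrieval augmented generation work",
                      retrieve("how does retrieval augmented generation work", docs))
```

The numbered source list is what lets the generated answer carry citations back to specific pages, which is why being retrieved and cited, rather than merely ranked, matters for GEO.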

-

Please leave your thoughts on the best and coolest AI Generated Images.

-

Please leave your thoughts on free alternatives to Midjourney, Stable Diffusion, and other AI image generators.

-

Please leave your thoughts on the scariest or creepiest AI-generated images.

-

We are seeing more applications of robotaxis and self-driving vehicles worldwide. Many large companies, such as Waymo, Tesla, and Baidu, are accelerating robotaxi deployment in multiple cities. Some human drivers, especially cab drivers, worry that they will lose their jobs to AI. They argue that AI's lower operating costs, combined with its ability to work 24 hours a day without the rest humans need, will give it a strong competitive advantage over human drivers. What do you think?

-

Please leave your thoughts on whether human artists will be replaced by AI image generators. Similar posts on other platforms, including Quora and Reddit, ask: "Is art even worth making anymore?", "Will AI art eventually permanently replace human artists?", "Do you think AI will ever replace artists?", and "Do people really think that replacing artists with AI is a good idea?"
